Gated Recurrent Units | Python GUI

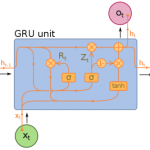

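Gated Recurrent Units (GRUs) are a recurrent neural network (RNN) architecture designed to mitigate the vanishing gradient problem that affects traditional RNNs. Compared with Long Short-Term Memory networks (LSTMs), GRUs have a simpler architecture. They use two gates (an update gate and a reset gate) instead of three, and they have no separate cell state. As a result, they have fewer parameters and are often faster to train. If you want to explore Gated Recurrent Units in more depth, take a look at this blog.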
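To make the gating concrete, here is a minimal pure-Python sketch of a single GRU cell. It follows the formulation of Cho et al. (2014). The update gate is z = σ(W_z x + U_z h_prev + b_z) and the reset gate is r = σ(W_r x + U_r h_prev + b_r). The candidate state is h̃ = tanh(W_h x + U_h (r ⊙ h_prev) + b_h), and the new state is h = z ⊙ h_prev + (1 − z) ⊙ h̃. The class name `GRUCell`, the helper `param_count`, the small random initialization, and the single bias vector per gate are all illustrative assumptions. This is not any particular library's implementation. Frameworks such as PyTorch, for example, apply the reset gate after the recurrent matrix product and keep two bias vectors per gate, so their parameter counts differ slightly.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m, v):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def add(*vecs):
    # Element-wise sum of equally sized vectors.
    return [sum(vals) for vals in zip(*vecs)]


def init_matrix(rows, cols, rng, scale=0.1):
    # Small uniform random weights (an illustrative choice, not a recommendation).
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class GRUCell:
    """Hypothetical minimal GRU cell: update gate z, reset gate r, candidate h."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        self.params = {}
        for gate in ("z", "r", "h"):
            self.params["W_" + gate] = init_matrix(hidden_size, input_size, rng)
            self.params["U_" + gate] = init_matrix(hidden_size, hidden_size, rng)
            self.params["b_" + gate] = [0.0] * hidden_size

    def step(self, x, h_prev):
        p = self.params
        # Update gate: how much of the previous state to keep.
        z = [sigmoid(v) for v in add(matvec(p["W_z"], x), matvec(p["U_z"], h_prev), p["b_z"])]
        # Reset gate: how much of the previous state feeds the candidate.
        r = [sigmoid(v) for v in add(matvec(p["W_r"], x), matvec(p["U_r"], h_prev), p["b_r"])]
        reset_prev = [ri * hi for ri, hi in zip(r, h_prev)]
        # Candidate state computed from the input and the reset-scaled previous state.
        h_tilde = [math.tanh(v) for v in add(matvec(p["W_h"], x), matvec(p["U_h"], reset_prev), p["b_h"])]
        # Interpolate between the previous state and the candidate.
        return [zi * hp + (1.0 - zi) * ht for zi, hp, ht in zip(z, h_prev, h_tilde)]

    def run(self, sequence, h0=None):
        # Process a sequence of input vectors and return the final hidden state.
        h = list(h0) if h0 is not None else [0.0] * self.hidden_size
        for x in sequence:
            h = self.step(x, h)
        return h


def param_count(input_size, hidden_size, num_blocks):
    # Each block has W (hidden x input), U (hidden x hidden) and one bias (hidden).
    # GRU: 3 blocks (z, r, candidate). LSTM: 4 blocks (input, forget, output, candidate).
    return num_blocks * (hidden_size * input_size + hidden_size * hidden_size + hidden_size)


cell = GRUCell(input_size=3, hidden_size=4)
h = cell.run([[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]])
print(len(h))  # 4
print(param_count(3, 4, 3), param_count(3, 4, 4))  # 96 128
```

With the same input and hidden sizes, the GRU in this sketch needs three weight blocks where an LSTM needs four. That is the source of the "fewer parameters" claim above.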